The UV Balancing Act: Under-the-Radar Things to Consider When Balancing Fraud Prevention and User Experience

Incognia recently hosted a webinar featuring Chelsea Hower of Sittercity and Travis Dawson of StockX to discuss an ever-present problem: balancing the need for user verification and security against the equally pressing need to deliver the best, most frictionless experience possible for users on both sides of the marketplace.

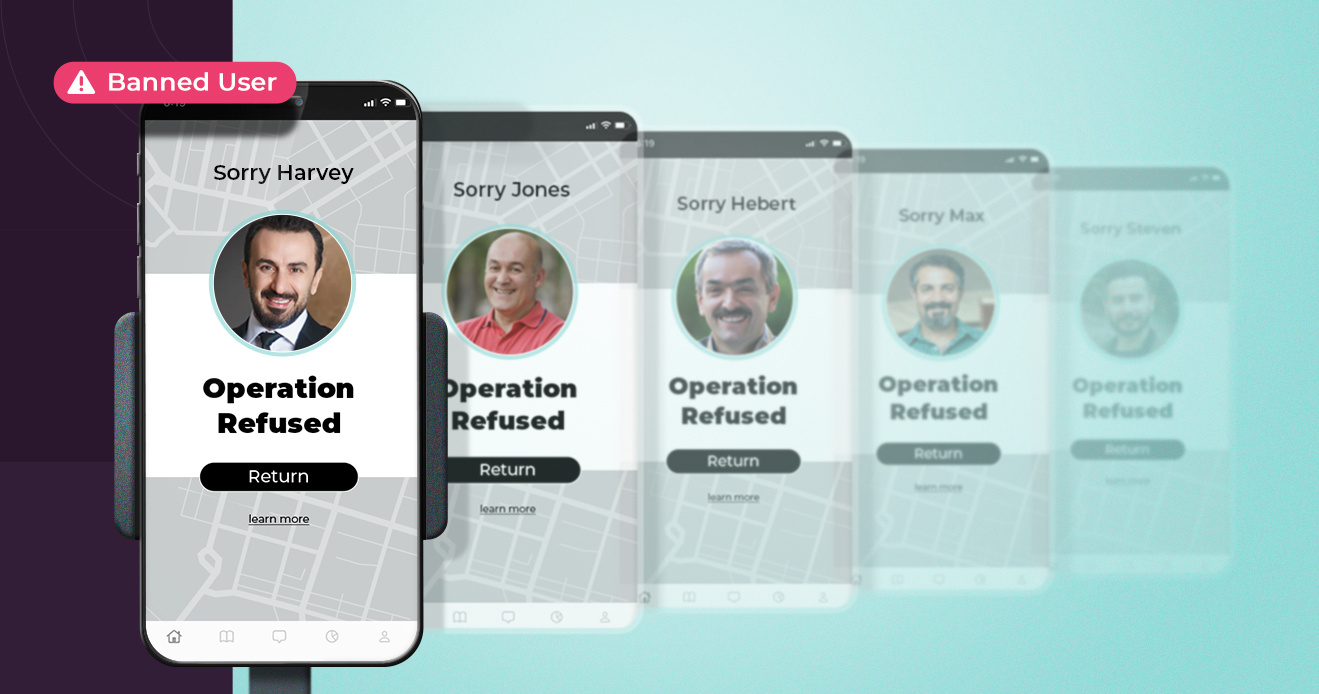

Trust & Safety and user verification are non-negotiable parts of running a successful marketplace app of any kind, but it can be hard to balance the most secure and resilient verification against the most user-friendly experience. Here are a few standout insights from our recent webinar on this question, “The User Verification Tightrope: Balancing Security and User Experience.”

Incentives to verify can be a better strategy than penalties

Sometimes, you catch more flies with honey than vinegar. Travis Dawson, Senior Director of Product Management for reseller marketplace StockX, explained in our webinar that when users are reluctant to complete the mandatory KYC verification they need to cash out of the app, offering incentives for verifying can be a more effective motivator than the threat of not being able to cash out.

Faced with the threat of being unable to withdraw their money if they don’t verify once they pass a certain earnings threshold, many users will simply create a duplicate account and start over. Offering early deposits in exchange for verifying, however, can be a strong enough lure to encourage verification.

As Dawson noted, sometimes a mix of carrot and stick is the most effective way to get seller buy-in for completing user verification (UV).

When do you stop trying to verify a user?

Sometimes good users have trouble verifying just from a technical standpoint, and they might need support from your team to finish the job. Unfortunately, fraudsters are just as capable of reaching out to your team as legit users, and they’ll use every excuse under the sun to explain why they can’t verify and try to get manual account approval from a team member.

So, how do you make the call between an exceptionally technologically challenged good user and a bad actor? When in the process do you decide to stop helping a user try to verify? Our speakers say: it depends.

Chelsea Hower, Director of Trust & Safety at Sittercity (an app that connects babysitters, petsitters, and housekeepers with families), said her team tries to help people verify as much as possible, but at a certain point they have to accept the risk of denying a good user the chance to join the platform.

“We kind of have a process where if we can't pass them through verification, if we try two, three times and it's not working, then we're seeing those red flags start to go up. And it very well may be a legitimate person, but at that point, it's just not a risk that we can take.”

The sensitivity of her user base (both the people who entrust a stranger with their children or pets and the people who have to enter a stranger’s home to do that watching) means her team has a lower risk tolerance and may have to accept more false positives. “...For those people who are legitimate users, it is frustrating. And I understand that. But then thinking of it from our perspective and our user base specifically, I think people, even though they're frustrated, they kind of understand.”

Offering a different perspective, Travis Dawson explained that because StockX is global, his team has actually been a little more lenient with verification issues, not less. Different countries have different systems that enable verification, such as ID or address databases, so someone in Hong Kong might not have as easy a time verifying their account as someone in the United States. His team looks for certain risk signals as part of deciding whether or not to close a ticket.

“If they're doing something obviously silly, like running through a bunch of names and social security numbers or names and National IDs…no, just no. And in some cases we'll let them continue trying. We're not actually doing the verification after X number of tries. It's like you can type in whatever you want at that point, you're going to fail. We're not paying for it anymore, but good luck…You watch them all of a sudden using different devices, and it's great, because we get to collect some of that information for later use…it's not cut and dry. It's how they failed and how many times they tried it.”

Interestingly, from Dawson’s side, fraudsters trying to sign up with stolen or synthetic identity information can be more of a feature than a bug. By stopping paid verification checks while letting fraudsters keep burning through their own resources, the platform has an opportunity to recoup some of what it spent on that fraudster in the form of intelligence that might be useful later.
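To make the two approaches concrete, here is a minimal Python sketch, assuming a hypothetical pay-per-check identity provider. Everything in it (`VerificationGate`, `max_paid_attempts`, `on_limit`, the `Attempt` fields, `always_fail`) is an illustrative name, not how StockX or Sittercity actually implement verification. Setting `on_limit="deny"` stops the user once the attempt limit is reached, closer to the approach Hower described; `on_limit="shadow"` stops paying for checks but keeps accepting and logging attempts, as Dawson described.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Outcome(Enum):
    VERIFIED = "verified"
    FAILED = "failed"                # a paid check ran and failed
    DENIED = "denied"                # limit reached; attempt refused outright
    SHADOW_FAILED = "shadow_failed"  # limit reached; no paid check, attempt only logged


@dataclass
class Attempt:
    device_id: str
    name: str
    national_id: str


@dataclass
class VerificationGate:
    """Hypothetical per-user gate in front of a pay-per-check identity provider."""
    max_paid_attempts: int
    on_limit: str = "shadow"  # "deny" (stop the user) or "shadow" (stop paying, keep logging)
    attempts: List[Attempt] = field(default_factory=list)
    paid_checks: int = 0

    def __post_init__(self) -> None:
        if self.on_limit not in ("deny", "shadow"):
            raise ValueError("on_limit must be 'deny' or 'shadow'")

    def submit(self, attempt: Attempt, provider_check: Callable[[Attempt], bool]) -> Outcome:
        # Every attempt is recorded, whether or not a paid check runs.
        self.attempts.append(attempt)
        if self.paid_checks < self.max_paid_attempts:
            self.paid_checks += 1
            return Outcome.VERIFIED if provider_check(attempt) else Outcome.FAILED
        if self.on_limit == "deny":
            return Outcome.DENIED
        # Shadow mode: the provider is never called, so the attempt always fails.
        return Outcome.SHADOW_FAILED

    def risk_signals(self) -> dict:
        # Cycling through many identities or devices is the kind of pattern
        # that separates a fraudster from a struggling legitimate user.
        identities = {(a.name, a.national_id) for a in self.attempts}
        devices = {a.device_id for a in self.attempts}
        return {
            "attempts": len(self.attempts),
            "distinct_identities": len(identities),
            "distinct_devices": len(devices),
        }


def always_fail(attempt: Attempt) -> bool:
    # Hypothetical stand-in for a real provider call; every check fails.
    return False


# Usage: five attempts with a different identity each time, across two devices.
gate = VerificationGate(max_paid_attempts=3, on_limit="shadow")
outcomes = [
    gate.submit(Attempt(f"device-{i % 2}", f"name-{i}", f"id-{i}"), always_fail)
    for i in range(5)
]
print([o.value for o in outcomes])
print(gate.paid_checks, gate.risk_signals())
```

Keeping the attempt log in both modes is the design choice that matters here: counts like distinct identities and devices feed the judgment Dawson described, where what counts is “how they failed and how many times they tried it,” while the paid-check counter caps what the platform spends.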

Trust & Safety measures are for vendors and customers alike

In Trust & Safety conversations around marketplaces, there’s usually an emphasis on the risk that the vendor side poses to the buyer side. For instance, in ride-hailing apps, there might be a bias towards talking about rider safety over driver safety, and on product marketplaces, there might be a focus on sellers scamming buyers rather than the other way around. But, as Hower points out, Trust & Safety is about everyone who uses a marketplace.

“...When we're looking at the demand side of the market, we're also looking into processing verification on that side, because it is important to protect all of your users. Sitters are the ones that are watching kids, but they're also going into strangers' homes.”

It’s clear that other verticals in the online marketplace are also thinking about balanced T&S measures. Uber, one of the biggest names in ride-hailing, has a Rider ID feature that requires additional verification from riders who use high-risk payment methods like prepaid gift cards. Starting in 2024, riders will have to provide an ID document to verify, and drivers will be able to choose whether to pick up unverified riders. The market recognizes that verification is as important on the demand side as on the supply side, and the technology is advancing accordingly.

Risk tolerance will fluctuate depending on user base & vertical

Something else to keep in mind is that not every user base requires the same stringency. What might be an automatic no-go for an app like Sittercity could start as just a red flag for StockX or another app. The user base, how much fraud the app is currently facing, and how often the app’s market is targeted by fraudsters are all factors that influence how much risk a platform can tolerate before it has to say no to a user.

As Dawson succinctly pointed out in the webinar, the answer to the question of how to balance user verification with user experience isn’t a fixed point: it varies not only from app to app, but from product to product, use case to use case, and even user to user. The best way to strike the balance is to understand your platform’s needs, its risks, and its user base. Like the fight against fraud, the struggle to deliver the best possible experience to users while keeping them safe is always going to be around.

The variability of this balance might be a feature instead of a bug, though: imagine if you could only apply the strictest or the most lenient solution to every single user and transaction type. Walking the tightrope is often difficult, but it also gives fraud prevention teams the opportunity to tip the scales in whichever direction they need, whenever they need to. To hear more of our speakers’ insights into the UX/UV problem, you can watch the full recorded webinar here.